Forget Crazy Earth,

Look to the Future:

Voyager and Fortran 5

Early 2024

(Updated: 18-Jul-2025)

The Voyager spacecraft,

Voyager 1 and

Voyager 2, were

not programmed in Fortran 5. Or in any

Fortran for that matter. This widely accepted misbelief can be traced back

to a widely misunderstood statement in a single magazine article.

This is a personal project of mine. Everywhere I turned the past few

years, someone was saying that the Voyager spacecraft were programmed

in Fortran 5. I didn't believe it. I gathered a few links here and

there, but, in early 2024, I sat down and did some serious online

research. Well, kind of serious and not particularly systematic.

This is what I found. It's wordy, it rambles, and the writing is

somewhat uneven, but I hope you'll find the information useful or

at least follow the links in your own explorations. There are many

fascinating aspects of the Voyager project that one normally might

not think of and only learn of by accident. I think you'll find

that even casual browsing can yield rich rewards!

Sections 1 through 5, about 10 printed pages, are specifically about the

Voyager-Fortran 5 meme. Section 6 adds another 9 pages and seeks to

prevent memes such as this from arising. The remaining tens of pages began

as simply a source of more detailed information about things presented in

the earlier sections, things such as command sequencing and the AACS

computer. However, I gradually added more unrelated information such as

the flight computer electronics because (i) the information was interesting

and, as a justification in hindsight, (ii) collecting this information in

one place will save other Voyager tech fans the effort of, for example,

tracking down and connecting nuggets from obscure posts in lengthy online

discussions.

You'll find phrases like "apparently", "perhaps", "it seems", and

"it suggests" throughout the text. They mean I can't definitively

assert what the sentence is about to say. For example, a great deal

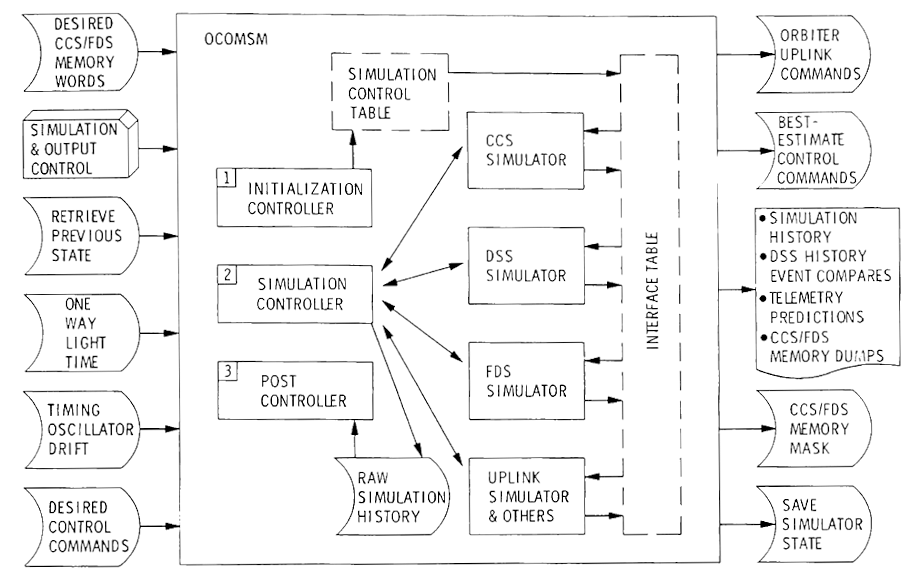

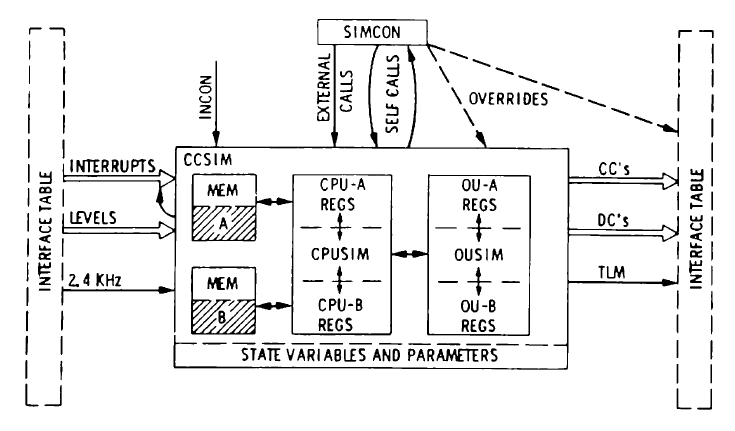

of my discussion of sequencing and simulation is based on McEvoy's

Viking Orbiter paper. There is no comparable Voyager paper that

provides his level of detail, so I can't with certainty say that

something done on Viking is done in the same way on Voyager—it

just seems likely! These uncertainties do not affect the

fact — and central thrust of this piece — that

the Voyager onboard computers were not programmed in Fortran.

My background: I'm a retired software developer. In the 1980s, I worked

on the image processing ground system for NASA's LANDSAT 4 and 5 remote

sensing satellites; we used VAX/VMS Fortran 77. In the late 1980s and

early 1990s, I worked on a configurable, Unix workstation-based, control

center for NASA's Goddard Space Flight Center, mostly written in C. In

the late 1990s through the mid-2000s, I worked on a similar system in C++

for commercial satellite fleets. (The latter was originally based on the

former thanks to NASA's generosity in sharing technology with industry.)

My brother, Andy, got interested in ham radio when we were in junior high

school and I picked up a rudimentary knowledge of electronics and radio

through osmosis. (Andy has an Extra Class license; originally WA3RML

from Maryland, he's now WA5RML in Texas.)

Acknowledgements: The bulk of this page was written in early 2024.

In the swirl of useful and not-useful papers and articles I read or skimmed,

bouncing here and there on the web, I generally didn't remember how I came

across individual sources and therefore I was unable to properly credit

people through whom I found many of these sources. Later in the year, I

began being more meticulous about recording the sources of the sources in

my (disorganized) offline notes and then crediting them on this web page.

My sincere apologies to those I failed to acknowledge in the past and to

those I may inadvertently fail to credit in the future. And many thanks

to all those who post good or even tentative bits of information in online

discussions and blogs.

The title of this piece is constructed from the titles of two songs by

Fortran

5 (Wikipedia) released on their 1991 album, Blues:

"Crazy Earth"

(YouTube) and

"Look To The

Future" (YouTube)!

1. Introduction

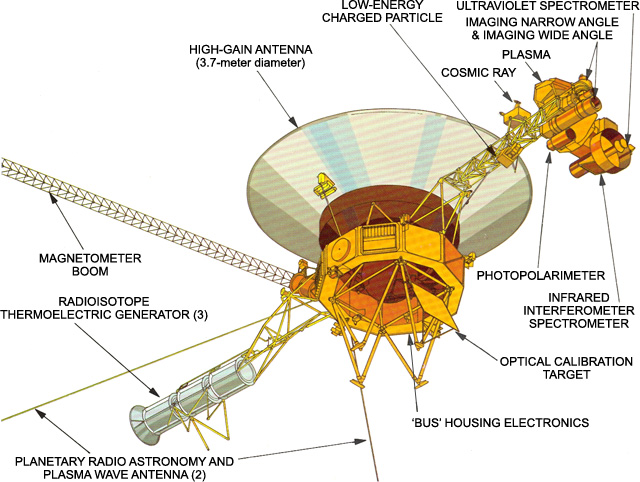

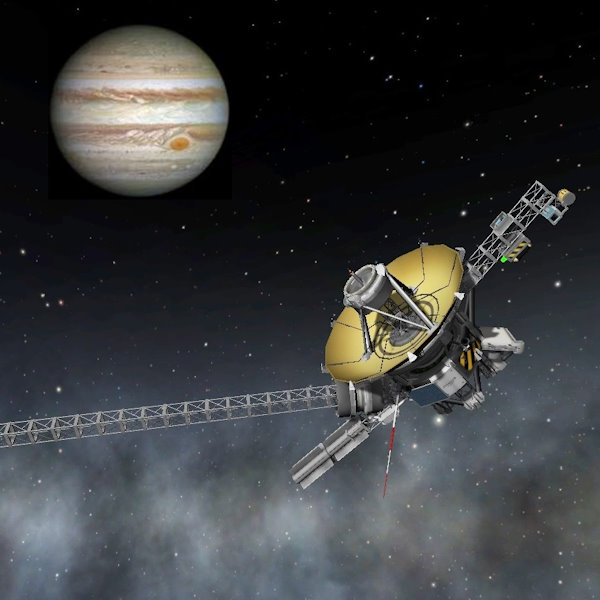

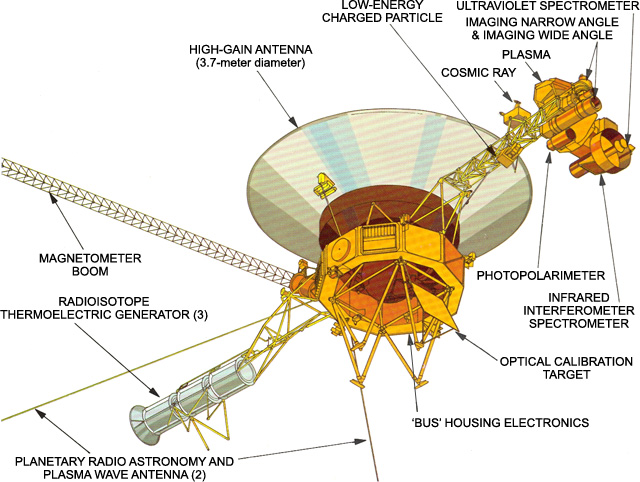

The Voyager program took advantage of a rare planetary alignment to send

two spacecraft on a tour of the solar system's gas giants: Jupiter, Saturn,

Uranus, and Neptune. In addition to the cameras and scientific instruments,

each Voyager has 3 computers (plus their backups, for a total of 6):

Computer Command Subsystem (CCS) - is the basically the master

controller of the spacecraft. As such, it "provides sequencing and

control functions. The CCS contains fixed routines such as command

decoding and fault detection and corrective routines, antenna pointing

information, and spacecraft sequencing information."

(Voyager: The

Spacecraft, JPL) The CCS CPU is the same as used on the Mars

Viking

Orbiters.

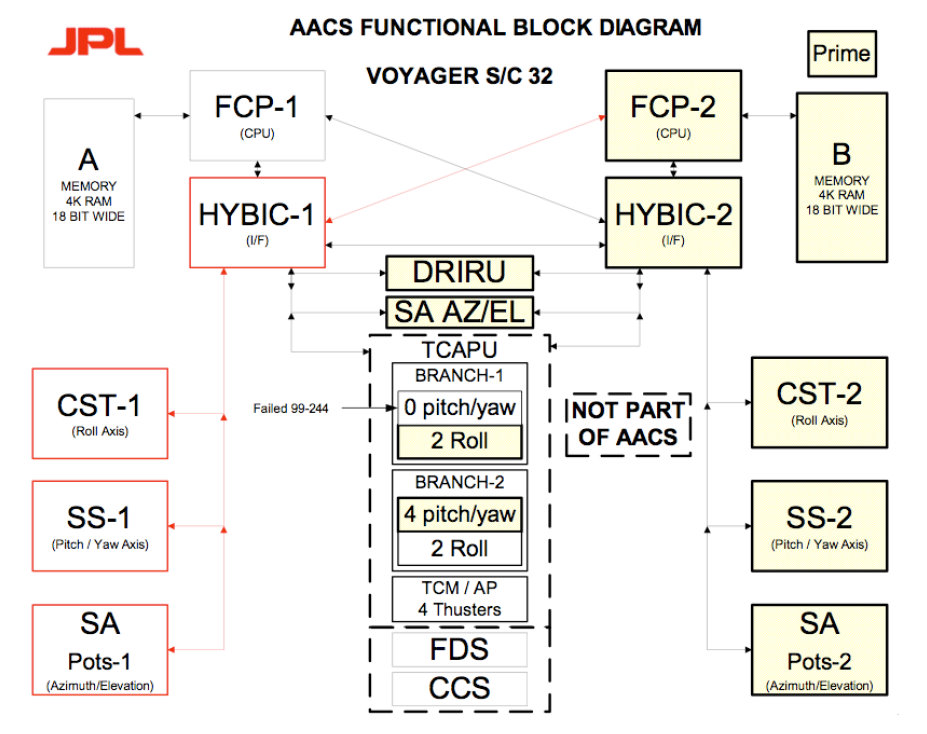

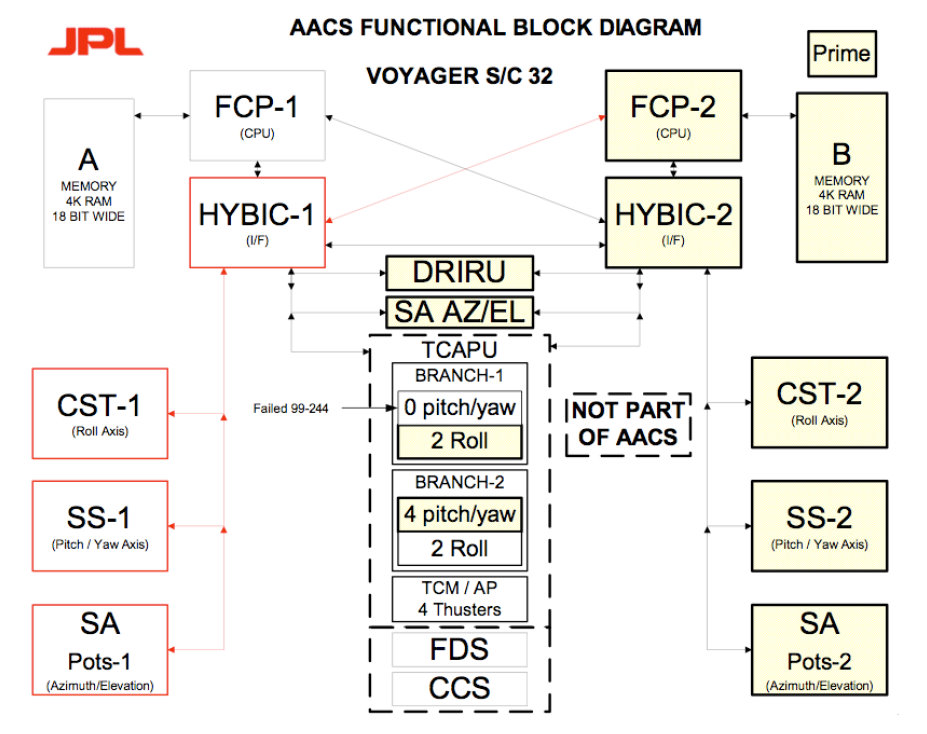

Attitude and Articulation Control Subsystem (AACS) - "controls

spacecraft orientation, maintains the pointing of the high gain antenna

towards Earth, controls attitude maneuvers, and positions the scan

platform." The cameras and a couple of other instruments are mounted

on the scan platform, hence adjusting their orientation must be done

in conjunction with the spacecraft's orientation.

(Voyager: The

Spacecraft, JPL) The AACS computer uses a modified CCS CPU and is

not the at-the-time experimental HYPACE computer. The latter

claim turned out to be an unexpected rabbit hole; see

"AACS, HYPACE, and HYBIC" below for more details.

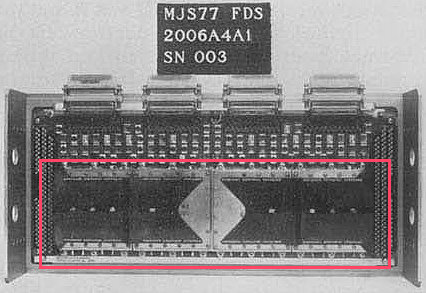

Flight Data Subsystem (FDS) - "controls the science instruments and

formats all science and engineering data for telemetering to Earth."

(pre-launch

Voyager

Press Kit, August 4, 1977, p. 22, PDF) The FDS used a custom-designed

CPU.

Dr. James E. Tomayko's

Computers in Spaceflight: The NASA

Experience (1988) is an oft-cited, authoritative (and

eminently readable) source of information about computing systems

in space up until 1988. The link takes you to the Bibliography

where further links to specific sections of the report can be found.

Chapter Six, Section 2 gives the history of and details about the

three Voyager computers. (Since the CCS computer was borrowed from

Viking, also see the more detailed description of the Viking Orbiter

CCS in Chapter Five, Section 6.)

And, expanding our vocabulary:

Flight Computers - are the computers onboard a spacecraft, like

the three above.

Flight Software - is the collection of programs and routines that

run on the flight computers. Like any computer program, the flight

software is composed of low-level CPU instructions such as

"Load CPU register A with the value at memory address 1234". Flight

software engineers write and maintain these programs.

Spacecraft Commands - are binary commands that perform operations

on the spacecraft. Uplinked to the spacecraft by mission control, they

are not CPU instructions. In a spacecraft without computers,

the commands are fed to a hardware decoder that effects the desired

operations. In a spacecraft with computers, the software interprets

the commands and runs the sometimes complex code needed to perform

the operations. (Think of the Unix bash shell, where the

"flight software" is the bash executable and the "spacecraft

commands" are the command-line commands: cd, ls, etc.)

Command Sequences - are sequences of spacecraft commands uplinked

to the spacecraft as a singular bundle. A given sequence may be executed

immediately, executed at a scheduled time, or executed only when the

spacecraft's hardware or software detects some condition. Sequence

engineers design and develop command sequences. (A simplified

definition of sequences; see

Sequences and Simulators below

for more details.)

In the telemetry data radioed back to Earth:

Engineering Data - has to do with the health and operation

of the spacecraft; e.g., temperatures, power levels, spacecraft

attitude, and readouts of flight computer memory for debug purposes!

Science Data - is that returned from the scientific instruments.

The prime example is the image data from the cameras.

And some other terms:

Uplink - upload/transmit data from Earth to a spacecraft;

e.g., send ground-generated commands up to Voyager.

Downlink - download/transmit data from a spacecraft to Earth;

e.g., send Voyager telemetry down to Earth.

A brief Voyager timeline (the fly-by dates are actually years of closest

encounters):

1977 - Voyager 2 and then Voyager 1 launched.

1979 - Both Voyagers fly by Jupiter. (V1 on March 5, V2 on July 9)

1980 - Voyager 1 flies by Saturn. (November 12)

1981 - Voyager 2 flies by Saturn. (August 25)

1986 - Voyager 2 flies by Uranus. (January 24)

1989 - Voyager 2 flies by Neptune. (August 25)

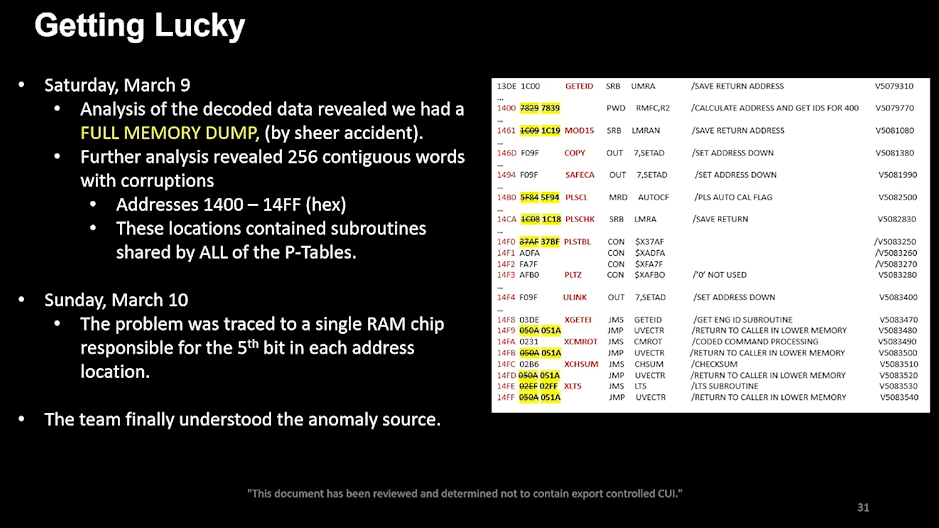

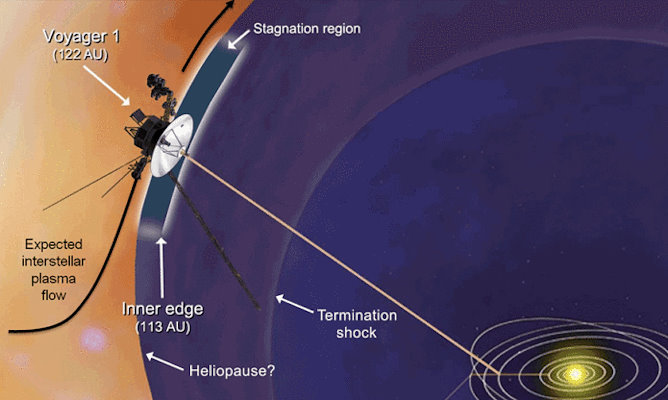

2004 - Voyager 1 crosses the termination shock.

2007 - Voyager 2 crosses the termination shock.

2012 - Voyager 1 enters interstellar space.

2018 - Voyager 2 enters interstellar space.

After the 1980 encounter with Saturn, Voyager 1 angled north

from the ecliptic (planetary) plane at about 35°. After the 1989

encounter with Neptune, Voyager 2 angled south of the ecliptic

plane at about -48°.

(Voyager

Fact Sheet, JPL)

Entering interstellar space means the Voyagers have emerged from the

heliosphere,

the region in which solar winds predominate. The spacecraft have

not yet escaped the Solar System, as the Sun's gravity still holds

sway. That will take another 30,000 years until the spacecraft

cross the outer edge of the hypothetical

Oort Cloud!

For a real treat, see the real-time

Voyager

Mission Status! I noticed that Voyager 1's distance from

Earth was rapidly decreasing (!) while Voyager 2's

was slowly increasing. At the same time, both spacecraft's distances from

the Sun were increasing at a constant rate as expected, both at about 10

miles every second. I eventually figured this out. (Completely missing,

like an idiot, the "Distance from Earth" link that pops up an explanation!

Well, it used to: NASA removed the link/popup in July 2024—why?)

Earth is traveling around the Sun at nearly twice the speed of the Voyager

spacecraft. For half of its orbit, Earth will be moving towards, for

example, Voyager 1 and is thus "catching up" to the

spacecraft. (That's a rough, simplified explanation. Given the Earth's

and Voyager 1's full 3-D velocities, you must separate out the

components along the changing Earth-Voyager 1 axis. When the

Earth's speed along that axis exceeds Voyager 1's, the

distance will decrease. So the distance will decrease on only a portion of

that half-year half-orbit.)

And the DSN Now

page shows the real-time status of the Deep Space Network antennas and

which spacecraft they are talking to, VGR1 and

VGR2 being the Voyager spacecraft.

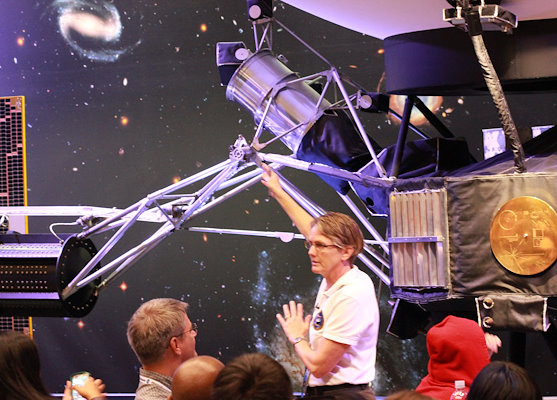

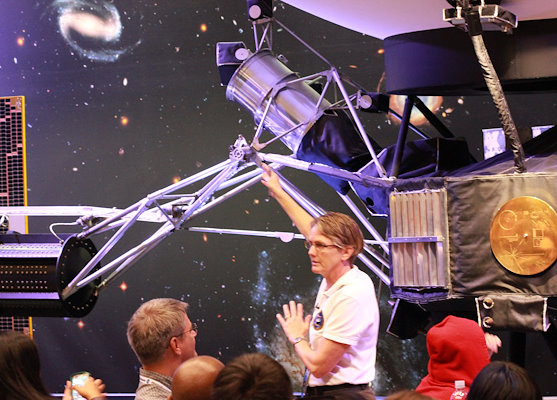

"Voyager Project Manager Suzy Dodd shows off her

spacecraft".

(Source: Bill_D@Flickr)

(click image to enlarge)

2. How It Started

Wired

In 2013, Wired published an article based on an interview with

Suzanne

Dodd, Voyager project manager at the time and, currently, also Director

of the Jet Propulsion's Interplanetary Network Directorate. In the article,

the author, Adam Mann, wrote (his words, not a direct quote from Dodd):

The spacecrafts' original control and analysis software was written in

Fortran 5 (later ported to Fortran 77). Some of the software is still

in Fortran, though other pieces have now been ported to the somewhat

more modern C.

—Adam Mann,

"Interstellar

8-Track: How Voyager's Vintage Tech Keeps Running", Wired,

September 2013.

My impression upon first reading this was that the ground-system mission

control and science data analysis software was written in Fortran and parts

were later ported to Fortran 77 and C. (I now think Dodd was referring to

mission control-related analysis instead of science data analysis.) I'd

never heard of "Fortran 5", but the rest was unremarkable and tracked with

my own experience. In the early 1980s, I worked on the image processing

side of the LANDSAT-4/5 ground system, programming in Fortran 77 on VAX/VMS

minicomputers. The mission-control developers on the other half of our

cubicle farm at NASA's Goddard Spaceflight Center programmed in Fortran

on DEC-2060 mainframe computers. And, in the late 1980s, I was part of

a small team developing a generic, UNIX workstation-based ground system,

TPOCC, for Goddard — programmed in C, of course!

So, aside from the "Fortran 5" oddity, Mann's article was spot-on and

provided an excellent update for me on the Voyager project. Others,

however, inferred more from the quote and, shortened to one sentence

with the "control and analysis" phrase dropped, a misleading form of

the quote spread in the popular press and in numerous hardware and

software forums. The inference or "meme", easily identified by the

"Fortran 5", is that the Voyagers' onboard computers were

programmed in Fortran, etc., etc., as shown here:

The initial software program was Fortran 5, then they were reprogrammed

during flight to Fortran 77, and finally C.

—South Australian Doctor Who

Fan Club Inc. (SFSA), "Voyagers disco party!",

The Wall of Lies, No. 169, Nov-Dec 2017, p. 2.

(4-page newsletter,

PDF)

(I'm not dumping on the fan club. For my example, the juxtaposition of

Doctor Who and disco practically screamed, "Choose me! Choose me!")

Popular Mechanics

I originally dismissed the following 2015 article in Popular

Mechanics as just another knock-off of the Wired article.

However, I was wrong and, upon rereading it more closely, I have to say

it is a superb article. Based on the author's own phone interview with

Suzanne Dodd, the article is about the Voyager project seeking a new

software developer to replace the soon-to-retire

Larry Zottarelli, "the last original Voyager

[software] engineer". From other information I gleaned from the web, it

appears he was one of the original flight software engineers, joining the

project pre-launch in the early-to-mid 1970s. Don't judge the article by

this isolated quote (again, the author's words, not Dodd's):

Know Cobol? Can you breeze through Fortran? Remember your Algol? Those

fancy new languages from the late 1950s? Then you might be the person for

the job.

—John Wenz,

"Why

NASA Needs a Programmer Fluent in 60-Year-Old Languages",

Popular Mechanics, October 29, 2015.

Between Wired and Popular Mechanics, I'm starting

to wish Suzanne Dodd had been a bit clearer in these interviews about what

the programming languages were used for. On the other hand, in her defense,

Dodd has spent decades having to and trying to convey scientific and technical

information in a meaningful way to the public and non-tech-oriented journalists

(in general; I don't mean Wenz).

In this particular case, I expect that knowledge of Fortran is

needed for some of the support software, but not for actually programming

the onboard computers. (Keep in mind that Fortran is not hieroglyphs!

Moderately experienced programmers should be able to pick it up fairly

easily.)

Aside: Algol? Really?! I have long taken some small measure of pride

in the fact that I am one of the few people in the U.S. who have actually

programmed in Algol! When I first caught the computer bug in 1977, I read

one of Daniel

McCracken's Fortran books and his Algol book ... before I even took my

first computer class and got access to a computer. In two classes where

the other students used Fortran, I used Algol because I wanted to try it

out — I liked it. This was the

Norwegian

University (NU) Algol 60 compiler on a Univac 1100-something mainframe.

Unrelated aside: In the Popular Mechanics article, Wenz

contrasts the Voyagers' programmability with that of other spacecraft

with fixed-hardware operation sequencers, such as ISEE-3. The generic,

UNIX workstation-based, misson control system I worked on at Goddard,

TPOCC (mentioned above), was used to replace ISEE-3's Xerox Sigma-based

(if I remember correctly) control system in the early 1990s! Launched

in 1978, ISEE-3 was repurposed in 1982 as the

International

Cometary Explorer (ICE) and underwent a complex set of maneuvers

leading to a rendevouz with Comet Giacobini-Zinner in 1985. (See this

cool

graphic of the maneuvers.) (The mission control system was called

the ICE/IMP control center, so it was controlling two spacecraft, the

second probably being the

Interplanetary

Monitoring Platform, IMP-8.)

3. What is Fortran 5?

A distinct detail in every replication of this meme is "Fortran 5". I haven't

used Fortran since the 1980s, but the unknown-to-me Fortran 5 was an immediate

red flag. In all the online discussions of this subject that I've seen,

no one questions "Fortran 5". Even in a

Fortran

Discourse thread where the users are very knowledgeable about Fortran.

It can't just be me ... please!

On Wikipedia's Fortran page, Fortran 5 is called an

obsolete

variant (i.e., non-standard version) of Fortran:

Fortran 5 was marketed by

Data General

Corporation from the early 1970s to the early 1980s, for the

Nova,

Eclipse,

and

MV

line of computers. It had an optimizing compiler that was quite good for

minicomputers of its time. The language most closely resembles FORTRAN 66.

Data General's Nova

840

minicomputer was introduced in 1973; a contemporaneous DG brochure,

"840:

The Loaded Nova", touts the Fortran 5 compiler in its feature list:

Fortran 5, an extremely thorough, multipass compiler that produces

globally optimized code that's nearly as efficient as assembly

language code

And Data General advertised Fortran 5 with

"It's

a Real Pig": "Pigs are the Smartest Animals in the Barnyard"! The

Fortran 5 compiler was slow because it was taking the time to produce

smaller and faster executables.

(Flickr

image)

Data General's 1978

Fortran

5 Reference Manual

(PDF)

lists 1972 as the first copyright date, incidentally the year the Voyager

project officially began.

4. Fortran V?

I've seen no mention of Data General computers in connection with the Voyager

project in my research, so the use of Fortran 5 on the project seems highly

unlikely.

My guess is that, when speaking to Wired's Adam Mann, Suzanne

Dodd said "Fortran Five", meaning "Fortran Roman Numeral Five",

or Fortran V. This is consistent with Fortran II being known as "Fortran Two"

and Fortran IV as "Fortran Four".

(Fortran III,

in case you're interested, was an unreleased, internal IBM compiler dating

to 1958.)

Fortran V, like Fortran 5, is one of Wikipedia's non-standard,

obsolete

variants of Fortran:

[In addition to Control Data Corporation,] Univac also offered

a compiler for the 1100 series known as FORTRAN V. A spinoff of Univac

Fortran V was Athena FORTRAN.

Now things fall into place. Remember that Suzanne Dodd spoke of "control

and analysis" software. She joined the Voyager team in 1984 as a sequence

engineer in the lead-up to Voyager 2's January 1986 fly-by

of Uranus. These engineers designed/developed sequences of spacecraft

commands for upload to the Voyagers ... work done on one or more Univac

1100-series mainframe computers. From an interview:

DODD: When I first started, I started on a— I don't even remember

the name of it, but I do recall it had an eight-inch floppy drive.

That was our command medium, an 8-inch floppy drive. Not a memory

stick, not even a CD. When we did these designs and plots that

showed—there was a program that you could design an observation,

like you want to make a mosaic over Uranus, and you could lay out the

observation, and then it would tell you what the commands you need

to do it are. That was done on a UNIVAC computer,

so kind of more of a mainframe refrigerator-size computer.

David Zierler,

"Suzy

Dodd (BS '84), Engineer and Deep Space Pioneer", 2023

Caltech Heritage Project, June 9, 2023.

I found more specific information about JPL's mission control computing

facilities in a pre-launch press kit

(Voyager

Press Kit, August 4, 1977, pp. 106-107, PDF):

Mission Control and Computing Facility (MCCF) - three IBM 360-75

mainframes used for the day-to-day operations of the spacecraft,

including command uploads and tracking.

General Purpose Computing Facility (GPCF) - three Univac 1108

mainframes used for "navigation and mission sequence systems ... [and]

prediction programs and detailed spacecraft engineering performance

analysis". These are the systems that Suzanne Dodd would have used.

(Assuming JPL hadn't upgraded the 1108s to newer models in the

intervening 7 years.)

Mission Test and Computing Facility (MTCF) - "three strings of

UNIVAC and Modcomp computers to receive, record, process and display"

the engineering and science data downloaded from the spacecraft.

(The Univac computers here were minicomputers such as the

UNIVAC 1230. The

Modcomp minicomputers

included the MODCOMP II and MODCOMP IV; for some reason,

Tomayko [Chapter 8, Section 3, p. 265] calls them

the ModComp 2 and 4, respectively, though everyone else on the web uses

the Roman numerals!)

Sun Kang Matsumoto is a CCS flight software engineer who joined the Voyager

program in 1985, also, like Dodd, in the ramp-up for the Uranus fly-by. In

a 2016 paper about the Voyager Interstellar Mission (VIM), she gives some more

details about the evolution of Dodd's "control and analysis" software from

Univac to Unix (and thus from Fortran to C, presumably):

During the prime mission and early VIM, Voyager had been using the

JPL-developed software programs called SEQTRAN (to generate sequences)

and COMSIM (to simulate sequences and CCS FSW changes). They ran on

now-antiquated UNIVAC mainframe computers. Shortly after the start

of VIM [in 1990], these programs were converted over to more

modern UNIX-based SEQTRAN and High Speed Simulator (HSSIM). They

were rewritten to maintain the same functionality of the old SEQTRAN

and COMSIM, and tailored for VIM. Rewriting and testing required

significant effort from the developers and the project personnel;

however, the end result is much improved speed and efficiency.

Sun Kang Matsumoto,

"Voyager

Interstellar Mission: Challenges of Flying a Very Old Spacecraft on

a Very Long Mission" (PDF), 2016

p. 4, 2016 SpaceOps Conference, Daejeon, Korea.

SEQTRAN and COMSIM are programs Dodd would have used as a sequence engineer

in the 1980s. I'm a connoisseur of good program names, so I can't fail to

mention Matsumoto's mention of two new programs: VAMPIRE (Voyager Alarm

Monitor Processor Including Remote Examination) and MARVEL (Monitor/Analyzer

of Real-time Voyager Engineering Link)! Matsumoto's paper is well-worth

reading not only for the technical information, but because she provides

a valuable portrayal of how the Voyager team has managed to overcome, by

hook and by software crook, the very real problems of aging hardware at a

great distance. And have done so despite steep technological and budgetary

challenges.

I think (or hope) it is clear by now that, in the 2013 Wired

article, Suzanne Dodd was referring to the ground system software and not

the software running on the flight computers in space.

Okay, Univac and Fortran. But what about Fortran V? I could only find

one instance of "Fortran V" being explicitly mentioned in connection with

a Voyager mission operations program. (I do not include academic papers

whose authors used Fortran V programs to analyze the Voyagers' science data

at institutions outside of JPL; e.g., universities.)

The scarcity of "Fortran V" and "Voyager" on the web is not

surprising. Most programming languages maintain backwards compatibility

between versions, so, for example, a Fortran IV program usually can be

recompiled with a Fortran V compiler without error and with identical

functionality. Consequently, programmers do not in general specify versions

when speaking about languages. A C programmer doesn't say, "I wrote this

program in C89 and later ported it to C99." Instead, it's just an unadorned

"C program". (This is not a slight on the Dodd-Mann remark.)

Also, Univac's Fortran V compiler was available as early as

1966,

so it probably would have been the default Fortran compiler on Univac mainframes

throughout the 1970s. Many Fortran users may therefore have been unaware that

the specific version they were using was Fortran V. (The documentation that I

could find online showed that a generic @FOR invoked the Fortran V

compiler and @FTN invoked the ASCII Fortran compiler discussed in

the second aside below.)

Aside: I anticipate eventually having to eat my pontificating words on

programming language versions! In the meantime ... I couldn't find an

online copy of Univac's Fortran V manual, but the 1970

UNIVAC 1108 System

Description (Section 10, p. 7) had this to say: "FORTRAN V,

being an outgrowth of the earlier FORTRAN languages (in particular,

UNIVAC 1107 FORTRAN IV and IBM FORTRAN IV as announced in IBM form

C-28-6274-1), accepts these languages as compatible although the

reverse is not necessarily true."

Aside: The transition to Fortran 77 on a Univac mainframe would have

been non-trivial. Univac's Fortran 77 compiler, ASCII FORTRAN, largely

accepted Fortran V source code with a few, documented, mostly

Univac-specific exceptions. The big problem, I think, would have been

character sets. Univac's 36-bit mainframes used 6-bit

FIELDATA

characters stored 6 per 36-bit word. The ASCII FORTRAN compiler used

the incompatible 7-bit ASCII character set. (On the Univac 1108

computer I worked on c. 1980, ASCII characters were stored as 9-bit

quantities, 4 per word.) Vanilla character strings in the source code

would just be converted to ASCII without complaint by the compiler,

but programs that depended on specific characteristics of FIELDATA

characters would require changes. That includes programs that must

read or write FIELDATA-compatible tapes. (I have no experience with

ASCII FORTRAN, so these are just my thoughts from perusing the

ASCII

FORTRAN Reference Manual; Appendix A addresses the differences

between FORTRAN V and ASCII FORTRAN.)

Unrelated aside: Circa 1980, I overheard a graduate student remark that

the shell pipe, |, was Unix's gift to mankind and

@ADD was Univac's gift to mankind. He was right!

The Voyager ground-system software I found that was explicitly stated to

have been written in Fortran V is the Orbit Determinaton Program (ODP),

which was also used on other missions. The mathematics in the following

1983 paper is way beyond me, but it establishes the ODP's host system:

To give an idea of the computational burden that is involved, consider a

typical radiometric SRIF/SRIS solution with 67 state variables (Table I).

This model contains only 4 process noise states (line-of-sight acceleration

and 3 camera pointing errors); there are 3500 data points and 132 time

propagation steps. The problem run on a UNIVAC 1110, in double precision,

used 275 CPU s[econds] for filtering; smoothed solutions and covariance

computation used 265 CPU s[econds]. The entire run scenario including

trajectory variational equation integration, observable partials

generation, solution mapping, and generation of smoothed residuals used

4320 CPU s[econds].

...

We note in closing this factorization algorithm discussion that the SRIF

and U-D algorithms that were used in this application have been

refined and generalized, and are commercially available in the form of

portable Fortran subroutines.

—James K. Campbell, Stephen P. Synnott, and Gerald J. Bierman;

"Voyager

Orbit Determination at Jupiter" (PDF), IEEE Transactions on

Automatic Control, Vol. AC-28, No. 3, March 1983, pp. 259-261.

For those not mathematically inclined, the paper is still worth skimming

just for the enumeration of some of the esoteric details of the Voyager

spacecraft that they had to account for in the calculations. We learn

what language was used in a 2008 presentation by Lincoln J. Wood of JPL:

The mainframe computers used include the IBM 7090, IBM 7094,

UNIVAC 1108, and UNIVAC 1100/81. During the 1980s the ODP was

transported to minicomputers, with the software maintained in

both mainframe and minicomputer operating environments to

fulfill the desires of various flight projects. The ODP

consisted of 300,000 lines of code as of 1979, with FORTRAN V

being the primary language.

—Lincoln J. Wood,

The

Evolution of Deep Space Navigation: 1962-1989" (PDF), p. 6,

31st Annual AAS Guidance and Control Conference,

2008, Breckenridge, Colorado.

Shortly after writing the above, I came across this entry in NASA's long-gone,

COSMIC software catalog:

Calculating Trajectories and Orbits

Two programs calculate the motions of spacecraft and landers.

The Double Precision Trajectory Analysis Program, DPTRAJ, and the

Orbit Determination Program, ODP, have been developed and improved

over the years to provide the NASA Jet Propulsion Laboratory with

a highly reliable and accurate navigation capability for their deep

space missions like the Voyager. DPTRAJ and ODP are each collections

of programs that work together to provide the desired computational

results. DPTRAJ, ODP, and their supporting utility programs are

capable of handling the massive amounts of data and performing the

various numerical calculations required for solving the navigation

problems associated with planetary fly-by and lander missions.

They were used extensively in support of NASA's Voyager project.

...

DPTRAJ-ODP is available in two machine versions. The UNIVAC version

(NPO-15586) is written in FORTRAN V, SFTRAN, and ASSEMBLER. (A processor

is supplied for SFTRAN, a structured FORTRAN.) DPTRAJ and ODP have been

implemented on a UNIVAC 1100-series computer. The VAX/VMS version

(NPO-17201) is written in FORTRAN V, SFTRAN, PL/1 and ASSEMBLER. It was

developed to run on all models of DEC VAX computers under VMS and has a

central-memory requirement of 3.4 Mb. The UNIVAC version was last updated

in 1980. The VAX/VMS version was developed in 1987.

—NASA Tech Briefs, September 1989,

p. 33.

A whopping 3.4 MiB of memory required for a lousy 300,000-line program?

Software hogs like that were the reason why we couldn't have nice things

back then ...

5. So What Language was Used?

Assembly Language(s)!

Let's ask an actual Voyager flight software engineer what language was and

is used to program the onboard computers. Perhaps the Voyager Fault

Protection and CCS Flight Software Systems Engineer who's worked on

Voyager since 1985:

Both the AACS and FDS use assembly language. The CCS uses assembly

language and Voyager-unique pseudo code (interpreter). As a result,

it is difficult to attract younger programmers to join the project.

Sun Kang Matsumoto,

"Voyager

Interstellar Mission: Challenges of Flying a Very Old Spacecraft on

a Very Long Mission" (PDF), 2016

p. 6, 2016 SpaceOps Conference, Daejeon, Korea.

In the acknowledgements at the end of the paper, Matsumoto thanks fellow

flight software engineer Larry Zottarelli,

now retired, and Suzanne Dodd, among others, for reviewing the paper.

I assume Zottarelli, Dodd, or one of the others would have spoken up

if they took issue with the above paragraph.

Along with Tomayko's report, Matsumoto's paper was the key source

for this page and answered many of my questions. Many thanks to

Vincent Magnin for sharing this link with

myself and others!

That would be two or three assembly languages: one for the CCS CPU,

possibly a variant for AACS's modified CCS CPU, and definitely another

for the completely different FDS CPU.

CCS Pseudocode

The phrase "pseudo code (interpreter)" made me think of an embedded

scripting language, but that seemed unlikely given the limited amount of

memory in the CCS computer. I puzzled over this for a long time until I

came across a 1997 paper by Rudd et al. Activities onboard the

spacecraft are controlled by sequences. I describe them in more

detail in my Sequences and Simulators

section, but, greatly simplified, a sequence is a list of spacecraft

commands uplinked from Earth that the CCS computer reads and executes.

When the spacecraft were flying by planets (Voyager's prime mission),

sequences could be lengthy and complex. After the planetary encounters,

the two spacecraft veered away from the ecliptic and began what is called

the Voyager Interstellar Mission (VIM), the subject of Matsumoto's

paper. At the beginning of VIM, c. 1990, a higher-level "sequencing

language" was apparently designed and implemented via a pseudocode

interpreter on the CCS computer (and presumably sequence-generation

software on the ground):

The fourth element [of the VIM sequencing strategy] is the use

of pre-defined and validated blocks of commands (high level sequencing

language), rather than the optimized sequence of individual commands

(low level sequencing language) used during the prime mission, to

accomplish desired spacecraft functions. While the use of pre-defined

blocks of commands greatly reduces the effort required to generate and

validate a sequence of commands, there is sometimes an inefficiency

in the number of memory words needed to accomplish a given function.

Fortunately, the VIM science data acquisition requirements and

available on-board sequence storage memory supports the use of

pre-defined blocks of commands.

Baseline Sequence

The baseline sequence is the set of instructions stored in the CCS

memory and is composed of the repetitive spacecraft activities that

execute continuously to return the basic fields, particles, and waves

science data. Eleven predefined spacecraft block routines (equivalent

to software subroutines) also stored in the CCS memory are used by the

baseline sequence to accomplish the desired spacecraft activities.

R.P. Rudd, J.C. Hall, and G.L. Spradlin,

"The Voyager

Interstellar Mission" (abstract), 1997

p. 391,

Acta Astronautica, Volume 40, Nos. 2-8, January-April 1997.

(doi:10.1016/S0094-5765(97)00146-X)

(I don't know if the predefined blocks in the Baseline Sequence paragraph

are among the predefined blocks in the first paragraph.) No more details

or a rationale for pseudocode were provided in the papers. Both Matsumoto

and Rudd et al stress the simpler, repetitive nature of spacecraft

operations in VIM, as opposed to the complex, unique sequences of operations

when flying by a planet. For the latter, there was a rich set of complex

tools for developing sequences on the Univac mainframes

(Brooks, 1988), some of which were ported to Unix

in the move off the mainframes. I wonder if JPL, foreseeing difficulty

in maintaining Voyager-specific sequencing expertise with limited staff

over the coming decades, sought a simpler means of scripting operations

on the spacecraft (substantially reducing or even eliminating the need

for creating new sequences). Rudd et al do hint at this awareness

on JPL's part elsewhere in their paper:

The transition from the Voyager prime mission to the Voyager Interstellar

Mission included significant changes in all areas of mission operations.

Initially these changes included:

- greatly reduced flight team staffing consistent with an extended mission

of reduced complexity;

...

- extensive re-engineering of the Mission Sequence Software (MSS) to

simplify the sequence generation process, and conversion (where feasible)

from a main frame computer to a networked personal computer (PC) based

environment;

...

- implementation of a new spacecraft and instrument sequencing technique

consistent with the repetitive nature of the planned science data

acquisition strategy.

R.P. Rudd, J.C. Hall, and G.L. Spradlin,

"The Voyager

Interstellar Mission" (abstract), 1997

p. 388,

Acta Astronautica, Volume 40, Nos. 2-8, January-April 1997.

(doi:10.1016/S0094-5765(97)00146-X)

When I said a pseudocode interpreter was unlikely in the CCS computer's

4K 18-bit words of memory, I was keeping in mind how the computer had

been chronically short of memory for storing sequences. I am well

aware of interpreters being implemented in constrained memory systems,

having been enthralled in my early computer days by Larry Kheriaty's

256-byte PILOT interpreters (6800 and 8080) in the September 1978

issue of BYTE magazine,

"WADUZITDO: How To Write a Language in 256 Words or Less",

and having read about Tiny BASICs

(Wikipedia)

in Dr. Dobb's.

AACS Power Codes

Before discovering Rudd et al's paper, one pseudocode possibility

I considered was power codes, which the CCS receives from the AACS

and must "interpret" or act upon:

A "power code" is a 6 bit message sent to the CCS computer, which may be

only informational or may cause a power command to switch power to an AACS

component. Such power switching commands are usually the means by which

redundant elements are exchanged. These power codes are an important part

of the fault protection logic, allowing the CCS computer to issue commands

in response to a fault condition. These commands may be a simple power

command (A gyro on) or a command sequence which will turn the spacecraft in

a pattern designed to re-oriented the spacecraft towards the sun from an

entirely random attitude. Some serious faults result in an OMEN power

code, which causes CCS to save the next three power codes (normally lost)

for later analysis.

Assessment of Autonomous Options for the DSCS III Satellite

System, 1981

Prepared for the U.S. Air Force by JPL personnel (Donna L. S. Pivirotto

and Michael Marcucci?),

"Volume III:

Options for Increasing the Autonomy of the DSCS III Satellite" (PDF),

p. 179, Aug. 6, 1981.

Calling power codes "interpreted pseudocode" was a stretch and I couldn't

even convince myself that this was the case. Leaving that aside, Matsumoto

discusses a problem with phantom commands in the "power decoder relay matrix":

The power decoder relay matrix problem that first manifested in 1998 makes

commanding of the spacecraft extremely difficult. Basically, the faulty

decoder may cause an issuance of extraneous power commands in addition to

the intended command.

Sun Kang Matsumoto,

"Voyager

Interstellar Mission: Challenges of Flying a Very Old Spacecraft on

a Very Long Mission" (PDF), 2016

p. 8, 2016 SpaceOps Conference, Daejeon, Korea.

I wonder if this is the interface used to convey the power codes from the AACS

to the CCS. Later, she describes a patch for this error made to

AACSIN, the CCS routine that she notes receives and processes

power codes, but the specific description of the patch again uses the term,

"power commands".

6. Why Assembly?

Since so many commenters in discussion forums had no problem with "Fortran 5",

it might be valuable to examine some possible reasons why the flight software

was written in assembly language.

The CPUs

CCS

There is not much information available specifically about the Voyager

CCS computer, borrowed from the Viking Orbiter. Fortunately,

Tomayko does provide details about the Viking CCS:

In general, the design of the processor was exceedingly simple, yet fairly

powerful, as indicated by the use of direct addressing, a minimal set of

registers, and a reasonably rich set of 64 instructions. The key is that

the design placed relatively light demands on spacecraft resources while

replacing both the programmable sequencer and the command decoder used

in the Mariners. The fact that the processor was later adopted by the

Voyager project as its Command Computer and modified for use as the

attitude control computer is not only a statement of JPL's frugality

but also a testament to the versatility of the design.

(p. 157)

[On the Viking Orbiter, the] Command Computer's central processor contained

the registers, data path control and instruction interpreter. The machine

was serial in operation, thus reducing complexity, weight, and power

requirements. It had 18-bit words and used the least significant 6 bits

for operation codes and the most significant 12 for addresses, as numbered

from right to left. This permitted 64 instructions and 4K of direct

addressing, both of which were fully utilized. Data were stored in signed

two's complement form, yielding an integer range from -131,072 to +131,071.

Average instruction cycle time came to 88 microseconds. Thirteen registers

were in the Command Computer, mostly obvious types such as an 18-bit

accumulator, 12-bit program counter, 12-bit link register that pointed to

the next address to be read, and a 4-bit condition code register that

stored the overflow, minus, odd parity, and nonzero flags.

(Box 5-3, p. 159)

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Five, Section 6.

Note the 12-bit addresses, giving an addressing range of 0..4095. And note,

in the following, that the Viking CCS flight software engineers masked most

interrupts in routines, thus simplifying code (which reduced memory usage)

and increasing performance:

Viking Orbiter software had to be written in an assembler, which

fortunately had relocatable addresses, simplifying the maintenance task.

The 64 instructions were mostly common to other computers, but there was no

multiply or divide. There were two sets of loads, stores, increments, and

subroutine calls: one used during independent operation and one aimed at

dual operation, so that the two memories could be kept equivalent. Even

though many interrupts were available, most routines as coded had all but

the internal error and counting interrupts disabled. Many routines were

free to run out without being interrupted, in contrast to the highly

interrupted Apollo and shuttle software. Programmers avoided the memory

and processing time overhead required to preserve the current accumulator

and register contents during an interrupt.

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Five, Section 6, Box 5-4, p. 162.

AACS

The Voyager AACS computer uses a modified CCS CPU (e.g., with the added

index register), so most of the CCS details above apply as well to the AACS:

JPL's Guidance and Control Section wanted to use a version of HYPACE as

the computer for the Voyager. However, there was considerable pressure to

build on the past and use existing equipment. [Edward] Greenberg proposed

using the same Viking computer in all systems on the Voyager spacecraft

that needed one. A study showed that the attitude control system could

use the CCS computer, but the Flight Data System could not due to high

I/O requirements ...

Guidance and Control grudgingly accepted the CCS computer on the condition

it be speeded up. Requirements for active control during the kick stage

burn meant that real-time control programs would have to be written to

operate within a 20-millisecond cycle, roughly three times faster than

the command computer ... Guidance and Control asked for a 1-megahertz

clock speed but wound up getting about three quarters of that. The

attitude control engineers also added the index registers that proved

so useful during the HYPACE experiment. Documentation for the system

still refers to the attitude control computer as HYPACE, even though

its heart was the command computer.

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Six, Section 2, pp. 177-178.

(In Box 6-1 on the next page in the report, Tomayko uses "HYPACE" to refer

to the AACS despite just pointing out that the term is a misnomer.)

FDS

The FDS CPU was developed especially to meet the high-rate data handling

requirements of the Voyager spacecraft. Designed by Jack L. Wooddell,

the CPU, in the form of a breadboard prototype, evolved in a collaboration

between Wooddell and flight software engineer Richard J. Rice. For some

reason, I picture Wooddell seated in front of a really large breadboard

with a soldering iron in one hand and a wirewrap tool in the other. Rice

is standing beside him with an open laptop in the crook of his left arm,

his right hand is typing on the keyboard, and the changing flight software

is downloaded via wi-fi to the breadboard computer for testing. Wrong era,

I realize, and Rice may well have been left-handed!

Voyager's data computer is different from most small general-purpose

computers in several ways. Its special registers are kept in memory,

permitting a large number (128) of them. Wooddell also wrote more powerful

shift and rotate instructions because of data-handling requirements.

Despite its I/O rate, the arithmetic rate is quite slow, mostly due to

byte-serial operation. This meant 4-bit bytes are operated on in sequence.

Since the word size of the machine is 16 bits, it takes six cycles to do an

add, including housekeeping cycles. If all the arithmetic, logic and

shifting were not done in the general registers, the machine would have

been even slower. Reflecting its role, in addition to the usual ADD, SUB,

AND, OR, and XOR instructions found on most computers, the data computer

has many incrementing, decrementing, and branching instructions among the

36 defined for the flight version of the machine.

Overall, the Flight Data System requires 14 watts of power and weighs 16.3

kilograms. Its computer needs just one third of a watt and 10 volts, less

than the power required for a temperature sensor! At first the estimated

throughput required was 20,000 16-bit words per second. By flight time,

the instruction execution rate was 80,000 per second, with data rates of

115,000 bits per second, much higher than previous Flight Data Systems.

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Six, Section 2, Box 6-2, p. 184.

Note that Tomayko uses the term byte-serial for non-8-bit "bytes", in

this case, when describing how the FDS CPU's arithmetic logic unit processes

16-bit operands 4 bits at a time. The last two sentences about throughput,

while not wrong, seem to ask the reader to compare apples and oranges.

The breadboard prototype began with the same 4K-by-18-bit plated wire

memory as the CCS computer. I don't know if this means the CPU was

originally designed as a 18-bit processor, but, in any case, it ended

up as a 16-bit processor with 8K-by-16-bit CMOS memory. From Tomayko's

description on p. 183 of Chapter 6, Section 2, my impression is that

the CPU used the same 12-bit addresses as the CCS, but two,

independent-of-each-other address lines were added that effectively

provided a 13-bit (8K) address range for instruction fetches and a 13-bit

(8K) address range for data accesses. The 13th

address bits have to be explicitly set/reset in a separate operation by the

programmer; see the OUT instructions in the code listings under FDS Flight Software. Switching execution

(instruction fetches) from one memory bank to the other is not as

straightforwward as switching data banks, however, as the programmer

probably doesn't want execution to begin at the exact same program counter

offset in the new bank as the old. The FDS CPU solves this problem by

delaying the new 13th address bit going into

effect until the next jump instruction.

If my understanding of Tomayko's description on p. 183 is correct, an FDS

CPU can run code anywhere in its lower and upper 4K words of RAM and,

likewise, can read and write data anywhere in those lower and upper 4K of

RAM. (Accessing a sequence of code or data that crosses the 4K boundary

may or may not be seamless depending on the computer architecture.)

About a month after I wrote the previous paragraph, a commenter in an

online discussion (probably about the Voyager team's workaround for the

November 2023, Voyager 1 FDS memory problem) said that code

is stored read-only in the lower 4K of RAM and the data is stored in

the upper 4K of RAM. I thought either the commenter had a different

interpretation of p. 183 or that JPL simply followed a convention of

maintaining a strict separation of code and data. (Tomayko doesn't

mention read-only.) However, rereading Tomayko another month later

revealed an interesting twist I had overlooked.

Tomayko has three relevant descriptions of FDS memory in Chapter Six,

Section 2:

... JPL's project staff believed that equipment would last longer if

unpowered. Although both CCSs are always powered, rarely are both

Flight Data Systems running, and both attitude control computers are

never turned on at the same time. Full bit-for-bit redundancy is not

maintained in the dual memories. For example, "expended" algorithms,

such as the deployment sequence executed shortly after separation from

the booster, need not be maintained. Both memories are accessed by

the single active processor in each system. The Flight Data System

keeps a copy of its instructions in both memories, but intermediate

data and variables can be stored in either memory.

(p. 174)

... Along with the new chips, the memory changed with an expansion to 8K.

Two "external" address bits were added to flag whether the top or bottom

half of the memory is being accessed. One bit is used to select the

memory half used for data access; the other, for the half used for

instruction access.

(p. 183)

... Also, since the machine can directly address the lower 4K of memory,

programs were to be kept there, with the upper portion for transient

data. Later the flight configuration of the computer evolved to one

processor accessing both memories. Therefore, a copy of the programs

is kept in the lower portion of each memory, but both upper portions

are usable by the single processor as a scratch pad. If dual mode is

required, the memories are separated.

(p. 185)

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Six, Section 2.

According to the descriptions on p. 174 and p. 185, an FDS CPU runs code

from its own lower 4K of RAM and can read and write data both in its own

upper 4K of RAM and in the other CPU's upper 4K of RAM. So 4K of code

and 8K of data. Note that this is not an increase in physical

memory — each CPU still only has 8K of RAM and the first CPU

is just borrowing 4K from the second CPU. If Tomayko's description on

p. 183 of the two additional address bits is accurate and these bits only

select the lower and upper banks of a CPU's own 8K of RAM, it is not clear

how an FDS CPU addresses the upper 4K of the other CPU's RAM.

Or, more generally, how any of the spacecraft's CPUs —

CCS, AACS, or FDS — access their alternate CPU's RAM,

as the description on p. 174 seems to suggest they are all capable

of doing. On a related note, how are software (and associated data)

updates received from the ground routed to and stored in their

target CPU's memory? For example, if the active CCS computer

is the one that receives and interprets an upload as a software

update for FDS CPU 2, how does the CCS computer directly or

indirectly store the code in FDS CPU 2's RAM?

Dual mode, p. 185, is when both FDS computers are powered up and

running independently of each other. Tomayko states that the two CPUs'

memories are separate in this mode of operation. That may have been JPL's

nominal intention, but the practical needs of the mission would supersede

this convention when necessary. During Voyager 2's flybys of

Uranus and Neptune, the primary FDS CPU (FDS-A) compressed the image data

and the secondary CPU (FDS-B) packaged up the data (and other science and

engineering data) for downlink to the ground. I think it would have been

most efficient for the FDS-B to directly access the compressed data in

FDS-A's RAM, although neither Tomayko nor others describe the exact

mechanism for doing so.

Tomayko's report was published in 1988 in between the 1986 Uranus and

1989 Neptune encounters and he may have written the Voyager section

much earlier. The roles of FDS-A and FDS-B in image data compression

is described in McLaughlin and Wolff,

1985. Cummings, 2025, spoke of an FDS

subroutine, COPY, for copying data from memory A to memory B on

Voyager 1, although there is no indication that COPY

was used on Voyager 2 for transferring the compressed

image data from FDS-A to FDS-B (slide/page 35 in PDF,

51:28

in video).

The FDS is not the fastest CPU on or off the planet:

FDS processing speed (1008 machine cycles per 2.5 ms) (p. 4)

...

While faster image readout rates are both desired and recoverable at the

downlink signal levels available in the extended mission, FDS processing

speed constrains the compressed image readout rate to no faster that 1

image per 4 min, the same as was used at Saturn. At this rate the FAST

compressor in FDS software is compressing 2666 2/3 pixels per second.

(p. 8)

Michael G. Urban,

"Voyager

Image Data Compression and Block Encoding", 1987

International Telemetering Conference, October 26-29, 1987,

San Diego, California.

(Thanks to

LouScheffer

for pointing me to this paper.) The clock rate works out to 403.2 kHz;

if instructions average 5 cycles (e.g., add is 6), that is consistent with

Tomayko's figure of 80,000 instructions per second.

Two sources of information about the FDS computer for Tomayko were the

designer himself, Jack Wooddell, and a paper written by Wooddell, "Design of

a CMOS Processor for Use in the Flight Data Subsystem of a Deep Space Probe":

42. ... Undated at the time, Wooddell thinks he wrote the "Design of

a CMOS Processor for Use in the Flight Data Subsystem of a Deep Space

Probe" in 1974, which makes sense as the development process lasted

from 1972 to 1974.

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

p. 338,

Source

Notes, Chapter Six.

Steven Pietrobon obtained

a copy of Wooddell's paper from Wichita State University, which houses Tomayko's

Collection

of NASA Documents.

(Dr.

Tomayko was a professor at Wichita at the time he wrote Computers in

Spaceflight and other reports for NASA.)

Note that the paper addresses a "feasibility design" and was written

in 1974, three years before the Voyager launches. Since changes may

have been made in the interval, the paper may not be a totally accurate

description of the final, as-built FDS computer.

The FDS CPU's 16-bit

instruction

set is very spartan, which probably contributes to its low component count

and low power consumption: 326 integrated circuits for the CPU alone, not

including RAM and, I presume, the interfaces to external devices such as

the science instruments and the Digital Tape Recorder. There are 3 hardware

registers (A, B, and C) that are probably built in to the CPU. Tomayko's

128 "special registers" comprise 16 general-purpose registers, 31 memory

pointers, 7 index registers, and 74 counters. These register are "special"

because they're not an integral part of the CPU and instead reside in memory,

the last 128 words of the lower 4K memory bank. Two advantages of this scheme

are (i) a lower component count at the cost of 128 words of RAM and

(ii) frequently used "registers" can be addressed with a 7-bit or shorter

register ID (depending on the group) rather than a 12-bit memory address, thus

producing more concise instructions/code. A big disadvantage is that an

instruction involving a special register requires, aside from the instruction

fetch, a read and/or write to memory, which imposes a performance penalty.

The FDS uses 12-bit addresses to address 4K words of memory. Tomayko's

external address bits — set/reset with OUT

instructions — provide separate 13th

address bits that select the lower and upper 4K of memory for,

respectively, instruction fetches and data reads/writes. A couple of

questions come to mind:

To access the special registers in memory, is the

13th data address bit

temporarily forced to 0? This seems necessary.

When the timer interrupt occurs, are both the instruction and data

13th bits reset to a known state? This

seems necessary so that the interrupt handler at 0x0000 (instruction

address bit: 0) is invoked and with its correct RAM (data address

bit: 1?). (See the description of FDS timer interrupts and P periods

in the Performance Constraints section.)

The FDS is truly a

reduced

instruction set computer, but without the speedy instructions! This is

especially apparent in the computer's primitive subroutine mechanism, which

Pietrobon fortunately explains. The CPU has no call stack and there is a

single jump instruction (but with multiple assembly mnemonics). JMP

saves the program counter (pointing to the next instruction) to hardware

register B and then jumps to the designated 12-bit memory location. This

behavior allows JMP to function as both a regular goto and a

subroutine call. A second mnemonic, JMS, has the same 4-bit

opcode (0) and does the exact same thing, so it is just an alias for

JMP. Pietrobon was not sure why there was a second mnemonic.

My guess is that the programmers use it to distinguish subroutine calls

from gotos in the assembly source code. A simple disassembler would

decode both as JMPs; a more sophisticated disassembler could

possibly examine the instruction(s) at the jump target address and

deduce whether it's a JMP or a JMS.

Okay, a subroutine is called using JMP/JMS. How does

the subroutine eventually return back to the caller? There is no instruction

that returns to the address in register B. Instead, the return is accomplished

with a dynamically generated JMP instruction. The machine code for a

JMP instruction is 0x0TTT, where the upper 4 bits are the opcode (0)

and the lower 12 bits are the target address. When entered, a subroutine

must perform an SRB to save the 12-bit return address in B to a

16-bit memory word; the upper 4 bits are zero, which makes the saved address

a JMP instruction. Executing the saved address/JMP returns

control back to the caller. An implicit call stack is formed by each

subroutine storing its return address in its own local storage, thus

allowing nested, non-recursive subroutine calls.

There are two methods for executing the saved address/JMP that

returns from a subroutine to its caller. Which method to use depends on

the configuration of the memory banks. The two techniques are described

later in the examples of FDS flight software,

Corrupted Memory Block and

Set CMODE from MODE, respectively.

Getting back to Voyager's computers in general, don't be dismayed by the

CCS and AACS CPUs' lack of multiply and divide instructions and the FDS

CPU's slow arithmetic operations. Spacecraft computers don't crunch

numbers. (Out of necessity, in my ground-software experience up to the

mid-2000s. More modern flight computers with more powerful processors and

abundant RAM may have changed this dynamic.) Sensors and detectors return

unit-less raw counts. If these readings are needed in real-time for

autonomous spacecraft operations, it is faster and more memory-efficient

for command sequences or flight software to work with these counts

directly. Otherwise, the ground system can apply its sizeable CPU, RAM,

and disk resources to perform what are called engineering unit

conversions; e.g., the conversion of a hypothetical temperature

sensor's raw 0-255 count to degrees Celsius. The FDS computer is focused

on assembling and formatting science and engineering data for transmission

back to Earth, so fast bit and byte manipulation is more important than

fast arithmetic operations. (Which I learn from Pietrobon are not quite

so fast!)

Memory Constraints

Wise words:

The Voyager flight software design was very heavily impacted by the

limited memory space. Extensive effort and ingenuity was required

to perform the necessary functions in the available space. The

flight software was written in assembly code.

Assessment of Autonomous Options for the DSCS III Satellite

System, 1981

Prepared for the U.S. Air Force by JPL personnel (Donna L. S. Pivirotto

and Michael Marcucci?),

"Volume III:

Options for Increasing the Autonomy of the DSCS III Satellite" (PDF),

p. 179, Aug. 6, 1981.

Words that annoy me:

The computers aboard the Voyager probes each have 69.63 kilobytes of

memory, total.

—Adam Mann,

"Interstellar

8-Track: How Voyager's Vintage Tech Keeps Running", Wired,

September 2013.

An otherwise good article, but ... First, I'm old-school, so the use of

"kilobytes" in the modern 1,000 sense annoys me. Second, the pointless

(and truncated) precision of 69.63 is annoying. Third, the sentence is

wrong because of the confusing wording. The computers don't each

have that much memory. The sum total of memory for all 3 computers and

their 3 backups is 69,632 bytes:

CCS - 4,096 18-bit words = 73,728 bits = 9,216 bytes

AACS - 4,096 18-bit words = 73,728 bits = 9,216 bytes

FDS - 8,192 16-bit words = 131,072 bits = 16,384 bytes

------

34,816 bytes

x 2 for the backup computers

------

Total for all 6 computers: 69,632 bytes

When examining the memory limitations from a programming standpoint, expressing

the memory size in bytes can be misleading. The CCS computer, for example,

has 9,216 bytes of RAM. Readers may mistakenly infer from that figure

that there are 9,216 addressable code/data units when, in fact, there are

only 4,096 addressable code/data units, a significantly lower number that

more accurately reflects the limits in which the programmers had to work.

For example, if you want a variable that counts from 1 to 10, you can't use a

memory-efficient single byte and must instead use 2¼ bytes (18 bits).

Yes, you can pack multiple data items into an 18-bit word and I'm sure the

Voyager assembly language programmers did just this, but doing so incurs

added memory/performance costs for packing and unpacking the individual data

items. And, yes, clever coding can reduce those costs and or even avoid the

need for packing/unpacking altogether. Which makes a strong case for using

assembly language rather than a higher-level language like Fortran 5!

A given computer's memory holds the flight software code (CPU instructions),

the software's dynamic variables, and uplinked data (tables, command sequences,

and such). Regarding the AACS, Dr. Tomayko wrote (emphasis added by me):

The programmers must have done an outstanding job, considering the slow

processor and limited memory. At launch, only two words of free space

remained in the 4K of plated wire. Tight memory is now a problem

because the scan platform actuators on Voyager 2 are nearly worn out,

and software has to compensate for this during Uranus and Neptune

encounter periods.

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Six, Section 2, p. 178.

(Interestingly, Tomayko's 1988 report was published after the Uranus encounter

and before the Neptune encounter! He was writing not a history of the distant

past, but an account of a still very much active and prominent project.)

A big chunk of Voyager 2's CCS memory is consumed by the

Backup Mission Load (BML), identified

by name in Matsumoto's paper and described by Tomayko as follows:

As pioneered on Mariner X, a disaster backup sequence was stored in the

Voyager 2 CCS memory for the Uranus encounter, and later for the Neptune

encounter. Required because of the loss of redundancy after the primary

radio receiver developed an internal short, the backup sequence will

execute minimum experiment sequences and transmit data to earth; it

occupies 20% of the 4K memory.

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Six, Section 2, p. 176.

In other words, if Voyager 2 loses the ability to receive

commands from the ground, the BML ensures that, on its own, the spacecraft

will continue to perform science operations and to downlink science and

engineering data. The original BML (or an early BML) covered a long

period of operations:

Since the failure of one of the receivers on Voyager 2, the spacecraft

has had a "back-up" mission sequence stored in its CCS occupying roughly

one-half of the available sequence memory. Affording protection of limited

mission return in the event of a second receiver failure, and thus

loss of commandability, the back-up mission sequence of Voyager 2 will

control the spacecraft for about 4-1/2 years (until early in 1986) and

includes in it a sequence for a Uranus flyby encounter.

Christopher P. Jones and Thomas H. Risa,

"The Voyager

Spacecraft System Design" (abstract), 1981

pp. 7-8, American Institute of Aeronautics and Astronautics (AIAA),

16th Annual Meeting and Technical Display

on Frontiers of Achievement, May 12-14, 1981, Long Beach, California.

(doi:10.2514/6.1981-911)

Fault protection is very important in a remote unmanned spacecraft and is,

incidentally, one of Sun Kang Matsumoto's areas of expertise. In the 1981

Assessment of Autonomous Options for the DSCS III Satellite

System, Volume III, we learn that 8 CCS

fault routines take up 26.5% of the CCS memory (Table B-3, p. 176) and

9-plus AACS fault routines take up 19.4% of the AACS memory (Table B-4,

p. 180). This suggests that nearly half of Voyager 2's

CCS memory was devoted to the fault routines (26.5%) and the

BML (20%), leaving only a little more than half of the

memory, about 2,200 words, for other CCS functions such as

command sequence storage and processing:

Fault S/W BML Everything Else!

Graph of CCS memory allocation (not a diagram of its layout).

This seems hard to believe and the sizes could well have changed up or down

over the decades since this 1981 study and Tomayko's 1988 report.

A couple of weeks after I wrote the above, I came across a 1987 paper by the

Voyager Flight Operations Manager, published between the Uranus and Neptune

encounters. This paper seems to indicate that, on Voyager 2,

there was even less memory available for sequence storage and processing,

about 1,250 words:

Out of the total CCS memory, the fault recovery routines, other fixed

routines, and flight reserve leave only about 2,500 words available for

sequencing of preplanned activities. These activities involve spacecraft

maneuvers, scan platform movements, data mode changes, data recording or

playback, scientific observations, etc.

...

Since the failure of one of the Voyager 2 command receivers in 1978, that

spacecraft has had a back-up sequence stored in its CCS that occupies

approximately one-half of the available sequencing space. In the event of

a failure of the remaining receiver, this back-up sequence will execute

until late in 1989, allowing for limited mission return including

data-taking during the Neptune encounter.

Terrence P. Adamski,

"Command and Control

of the Voyager Spacecraft" (abstract), 1987

p. 3, American Institute of Aeronautics and Astronautics (AIAA),

25th AIAA Aerospace Sciences Meeting, March 24-26, 1987, Reno, Nevada.

(doi:10.2514/6.1987-501)

(And a couple of more weeks later, I found the exact number: 1,239 words

per CCS computer! See Morris's 1986 paper, p. 173.)

The "fixed routines" would probably have included the core software such

as the executive discussed below, interrupt handlers, communications with

the AACS and FDS computers, ground communications, etc. At the beginning

of the Voyager Interstellar Mission in 1990, a BML was installed on

Voyager 1 too (see Matsumoto's paper, p. 3). It and probably

an updated Voyager 2 BML would have been tailored to the

post-planetary-encounter, interstellar mission. (I suspect that

Voyager 2's original BML, intended for the Uranus and Neptune

encounters, could have been reduced in scope and size afterwards.)

I couldn't find much detailed information about the actual flight software.

Both Tomayko and the Autonomous study describe the

cyclic executive

used on the AACS computer to schedule routines (functions) in regularly-spaced

time slots:

A flow chart of the AACS flight software is shown in Figure 4-14 in

the main body of this report. Normal program execution occurs in

three different rate groups having periods of 10 ms, 60 ms, and 240 ms.

The fourth rate group shown (20 ms) was used only for the Propulsion

Module operation. Functions requiring high rates such as thruster

activation and scan platform stepper motor operations are performed

by the 10 ms logic. The bulk of the attitude control functions, such

as attitude sensor 'reads' and control law algorithms, are accomplished

by the 60 ms logic. The 240 ms logic performs a variety of tasks that

do not require the higher execution rates, such as decoding CCS commands

from the input buffer, fault monitor and correction, and "power code"

processing.

Assessment of Autonomous Options for the DSCS III Satellite

System, 1981

Prepared for the U.S. Air Force by JPL personnel (Donna L. S. Pivirotto

and Michael Marcucci?),

"Volume III:

Options for Increasing the Autonomy of the DSCS III Satellite" (PDF),

p. 179, Aug. 6, 1981.

In a more visual form, here are the 10-ms time slots and the 60- and 240-ms

intervals:

.-- 10 ms

v

| | | | | | | | | | | | | | | | | | | | | | | | |

| <- 60 ms -> | | | |

| <- 240 ms -> |

Difficult-to-update, hand-drawn flowcharts are a thankfully retired relic

of the 1970s software industry and I provide here a pseudocode version of

the scheduling algorithm on the AACS. It is not apparent from the

Autonomous study's flowchart, but I do make an assumption

that the 60-ms routines, for example, are not all run in a single 10-ms

time slot every 60 ms. Instead, the per-slot load is lessened by

distributing them over the six 10-ms time slots in the interval. So,

given 60-ms routines ABC and DEF, then

ABC might be run in the 10-ms slots 2, 8, 14, 20, 26, ... and

DEF might be run in slots 5, 11, 17, 23, 29, etc.

Offset60 = 0

Offset240 = 0

EVERY 10 ms DO {

Run all of the 10-ms routines.

Run any 60-ms routines scheduled for time slot Offset60.

Increment Offset60. If 6 then reset Offset60 to 0.

Run any 240-ms routines scheduled for time slot Offset240.

Increment Offset240. If 24 then reset Offset240 to 0.

}

Within a time slot, all the scheduled routines must be sure to finish before

the start of the next time slot. The programmers must quantify beforehand

the worst-case execution times for each of the routines and ensure that the

sum does not exceed 10 ms. The programmers probably are counting instruction

cycles for the assembly statements in each routine. (I use the present tense

because this would have been done for the routines prior to the 1977 launch

and still needs to be done for routines updated/uploaded nearly 50 years

later.) If the time exceeds 10 ms and offloading some 60-ms and 240-ms

routines to other time slots is not a possibility, the programmers must

speed up the routines. This is most easily done working directly with the

assembly language. It would be awkward to do this in a higher-level language

because the quanitification still has to be done at the assembly language

level.

Both Tomayko and Autonomous also mention the FDS computer's

P periods, with Tomayko providing a little more detail. With 2.5-ms

time slots, the execution constraints were even more stringent than those

on the AACS. There is no indication of whether or not the FDS had multiple

levels of intervals like the AACS. And apparently the CCS software was also

built on a cyclic executive?

Like the command computer, the data computer has a simple executive. Time

is divided into twenty-four 2.5-millisecond intervals, called "P periods."

Each 24 P periods represent one imaging system scan line. Eight hundred of

those lines is a frame. At the beginning of each P period, the software

automatically returns to memory location 0000, where it executes a routine

that determines what functions to perform during that P period. Care is

taken that the software completes all pending processes in the

2.5-millisecond period, a job made easier by the standardization of

execution times once the direct memory access cycle was added.

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Six, Section 2, Box 6-3, p. 185.

Unrelated to performance concerns, the use of an executive proves the truth

in Richard Rice's wry quip:

Rice characterized the unique nature of the data computer software

this way: "We didn't worry about top-down or structured; we just

defined functions."

James E. Tomayko,

Computers in Spaceflight: The NASA Experience, 1988

Chapter

Six, Section 2, pp. 183-184.

The executive provided the top-level structure, so the programmers really

were just writing functions to be called when their time slot came up!

The Voyager team takes a break from worrying

about bit flips and decreasing RTG power! Left to right: Lu Yang,

Todd Barber, Sun Matsumoto, Enrique Medina, and Jefferson Hall.

The picture is from the movie,

It's Quieter in the

Twilight, about 45-50 minutes in, right after the camera

panned away from Chris Jones and Roger Ludwig on the right side of

the table. (Source: JPL's internal newsletter,

Universe,